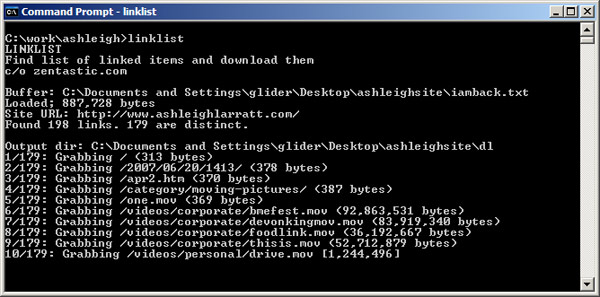

I doubt this will be of immediate use to any readers, but you never know.

I was moving my sister’s blog, and one of the problems was that her previous site had crashed and her old host wouldn’t give her FTP access to download the files. Luckily, most of the files were linked from another blog, so I had many of their URLs. All I had was a text/HTML file containing the links, so I wrote a utility that goes through an HTML file, finds all the links under a given site URL, and downloads those files to a local directory.

Usage: you’ll be asked for three things:

- Input File – This is the local file that contains the links. It’ll use every link enclosed in single or double quotes (i.e., page links, images, everything) and download files of any type. Up to 5,000 distinct links are supported. Example: C:\Documents and Settings\billy\Desktop\harvested.html

- Link Site – This is the URL to look for links from. For example: https://zentastic.me/

- Output Directory – This is the local directory that the links should be saved to. For example: C:\Documents and Settings\billy\Desktop\dl
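The post doesn’t show how linklist.exe scans the input file, so here is a minimal Python sketch of just the extraction step, under a few assumptions: a link counts if it sits inside single or double quotes and begins with the Link Site string, duplicates are dropped in first-seen order, and collection stops at 5,000 distinct links. The helper name `extract_links` and the prefix-matching rule are hypothetical illustrations, not the tool’s actual code.

```python
import re

MAX_LINKS = 5000  # the post's stated limit on distinct links


def extract_links(html: str, link_site: str, limit: int = MAX_LINKS) -> list[str]:
    """Return distinct quoted URLs that start with link_site, in first-seen order.

    Assumption: a "link" is any single- or double-quoted string whose
    contents begin with link_site (hypothetical rule, not the tool's code).
    """
    site = re.escape(link_site)
    # Match only a quote immediately followed by the site prefix, so stray
    # apostrophes in page text (e.g. "It's") can't pair up and swallow a link.
    pattern = re.compile(rf'"({site}[^"]*)"|\'({site}[^\']*)\'')
    links: dict[str, None] = {}  # dict keys keep insertion order and drop duplicates
    for match in pattern.finditer(html):
        url = match.group(1) or match.group(2)
        links.setdefault(url, None)
        if len(links) >= limit:
            break
    return list(links)


if __name__ == "__main__":
    sample = """<a href="https://zentastic.me/a.jpg">It's here</a>
<img src='https://zentastic.me/b.png'> <a href="https://example.com/c">x</a>
<a href="https://zentastic.me/a.jpg">again</a>"""
    print(extract_links(sample, "https://zentastic.me/"))
    # → ['https://zentastic.me/a.jpg', 'https://zentastic.me/b.png']
```

Anchoring the match on the site prefix right after the opening quote is a deliberate choice: a generic "anything between quotes" pattern tends to mispair apostrophes in ordinary page text.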

It’s pretty simple to use. It will handle larger files, but anything bigger than a couple of megabytes is pretty slow. If someone needs a version that handles more or larger files, wants a GUI, or needs a custom web-harvesting application, drop me a line. They only take me a few minutes to customize.
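On the subject of large files: one common approach is to stream each response to disk in fixed-size chunks rather than reading the whole file into memory first. The Python sketch below does that with the standard library. It is an illustration only, not how linklist.exe is implemented, and it makes no claim about speed. The helper names `local_name` and `download` are hypothetical, and the naming rule (save each file under the last segment of its URL path, or `index.html` if there is none) is an assumption, so two links with the same filename in different folders would overwrite each other.

```python
import os
import shutil
import urllib.parse
import urllib.request


def local_name(url: str) -> str:
    """Hypothetical naming rule: last URL path segment, percent-decoded, else 'index.html'."""
    path = urllib.parse.unquote(urllib.parse.urlparse(url).path)
    return os.path.basename(path) or "index.html"


def download(url: str, out_dir: str, chunk_size: int = 64 * 1024) -> str:
    """Stream url into out_dir chunk by chunk and return the saved file's path."""
    os.makedirs(out_dir, exist_ok=True)
    dest = os.path.join(out_dir, local_name(url))
    # copyfileobj reads and writes chunk_size bytes at a time, so memory use
    # stays flat no matter how large the remote file is.
    with urllib.request.urlopen(url) as response, open(dest, "wb") as out:
        shutil.copyfileobj(response, out, chunk_size)
    return dest
```

Fed the list from the extraction step, a loop such as `for url in links: download(url, out_dir)` would reproduce the basic behavior described above, minus any error handling for dead links.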

Download: linklist.exe (35k)

7 Comments

Any mention of you writing code makes me hot and bothered. It may be that I have too many layers. :P

Sorry for being off topic, Shannon, but I was really hoping to read your thoughts on Obama’s inauguration. I’m sure I’m not alone in missing your political posts. Will you humor us?

#2, I second that. Pretty please.

You should look at wget, which I’m pretty sure will run under Cygwin.

(snipped from the wget manpage):

…Wget can follow links in HTML and XHTML pages and create local versions of remote web sites, fully recreating the directory structure of the original site. This is sometimes referred to as “recursive downloading.” While doing that, Wget respects the Robot Exclusion Standard (/robots.txt). Wget can be instructed to convert the links in downloaded HTML files to the local files for offline viewing…

Yes, there’s significant overlap between this and ‘wget’.

I normally would use wget.

why are you not using wget?

what is wrong with wget?

wget isn’t native to Win32, and this is streamlined and simple to use for the application I needed… for this particular purpose it’s easier and more effective.
